18. The paradox of second innings wickets

Measured in different ways, teams batting second lose more or fewer wickets than teams batting first

It started as an attempt to measure risk.

In limited overs cricket, teams have two resources to play with: wickets left and balls remaining. In Test cricket, by contrast, balls are so abundant that in most games teams operate under only one resource constraint, the number of wickets left. Consequently, the approach in Test cricket is to put a high price on each wicket, preserving wickets in the hope of scoring more runs.

In the first couple of decades of ODI cricket, teams approached the game in a similar one-resource manner. The focus was on preserving wickets, and four runs per over got you to a good score. That changed through the 1990s, as teams figured out that limited balls meant they could take more risk with their wickets. Scores shot up through that decade.

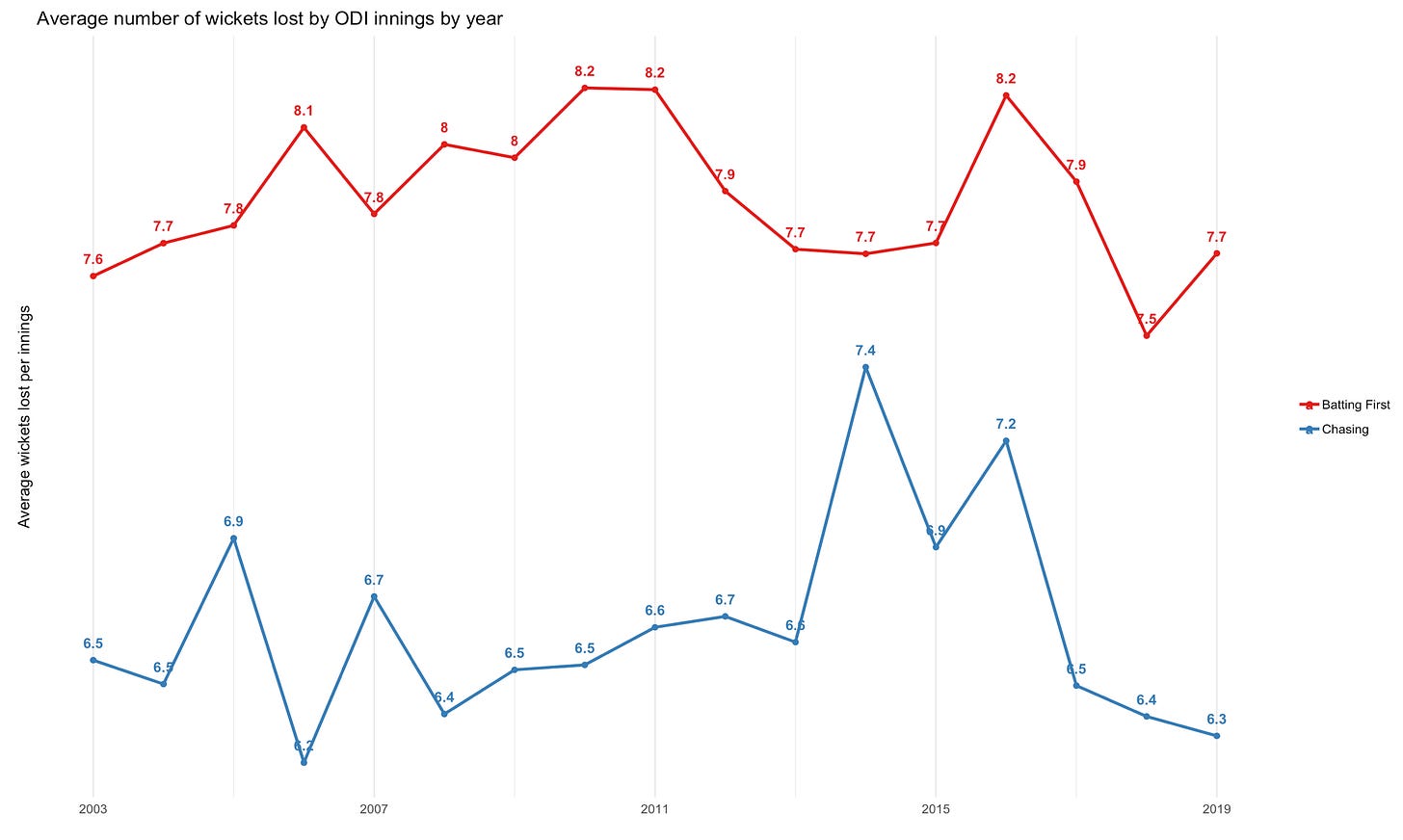

So this morning I was trying to see if there is a pattern in the total number of wickets lost by a team in an ODI innings. First, I thought I would measure it using the total number of wickets lost at the end of an innings.

What should strike you here is that chasing teams have always lost, on average, fewer wickets than teams batting first. There is no real pattern over time, apart from maybe a decline in the number of wickets lost by teams batting first in the 2011-15 period. What stands out is that teams lose about one more wicket when batting first than they do while chasing.
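For readers who want to reproduce this kind of comparison, here is a minimal sketch of the grouping involved: average wickets lost at the end of the innings, split by innings (1 = batting first, 2 = chasing) and by five-year period. The record format `(year, innings_number, wickets_lost)`, the helper names `period_of` and `average_final_wickets`, and the rows themselves are all hypothetical placeholders, not the data behind the chart.

```python
from collections import defaultdict
from statistics import mean

# Hypothetical records: (year, innings_number, wickets_lost_at_end).
# These rows are made up for illustration; they are NOT real match data.
innings = [
    (2003, 1, 8), (2003, 2, 7),
    (2004, 1, 9), (2004, 2, 6),
    (2012, 1, 7), (2012, 2, 6),
    (2013, 1, 8), (2013, 2, 8),
]

def period_of(year, width=5, start=2001):
    """Bucket a year into a block label such as '2011-15' (assumed bucketing)."""
    lo = start + ((year - start) // width) * width
    return f"{lo}-{str(lo + width - 1)[-2:]}"

def average_final_wickets(rows):
    """Mean wickets lost at the end of the innings, keyed by (period, innings)."""
    groups = defaultdict(list)
    for year, inn, wkts in rows:
        groups[(period_of(year), inn)].append(wkts)
    return {key: mean(vals) for key, vals in groups.items()}

for key, avg in sorted(average_final_wickets(innings).items()):
    print(key, avg)
```

With real data, the rows would come from a scorecard database; only the grouping logic is the point here.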

In an attempt to dig deeper into why teams lose fewer wickets while chasing, I looked at when teams lose their wickets: I calculated the average number of wickets lost at the end of each ball in an innings and plotted them (this is for all ODIs since mid-2001). The result was surprising.
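One way to compute such a ball-by-ball curve is sketched below, assuming each innings is stored as a list of cumulative wickets lost after each legal ball, so the list's length is the number of balls the innings lasted. The function name `average_wickets_by_ball` and the sample progressions are hypothetical. Note that at each ball it averages only over innings that were still in progress, which is exactly where the selection effect discussed below creeps in.

```python
from statistics import mean

def average_wickets_by_ball(progressions, max_balls=300):
    """For each ball b (1-indexed), average the wickets lost after ball b,
    using only the innings that lasted at least b balls."""
    curve = []
    for b in range(1, max_balls + 1):
        still_batting = [p[b - 1] for p in progressions if len(p) >= b]
        if not still_batting:
            break  # no innings in the sample lasted this long
        curve.append(mean(still_batting))
    return curve

# Hypothetical progressions: one 4-ball innings, one 3-ball innings.
print(average_wickets_by_ball([[0, 1, 1, 2], [0, 0, 1]], max_balls=10))
```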

At any point in an innings, a team batting second is likely to have lost more wickets (on average) than a team batting first. This is the paradox in the title of this post: if you look at the number of wickets lost at the end of the innings, teams lose more wickets batting first. If you look at the progression of wickets, teams lose more wickets batting second!

This is not a difficult paradox, however, and can be explained by a simple selection bias. Not all chases last the full fifty overs. While a few end early because the team is all out, many end early because the target has been achieved. So when we look at the average wickets lost at the end of (say) the 45th over, we are only looking at the limited set of games where the chase lasted 45 or more overs.
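The selection bias can be reproduced with a deliberately tiny, made-up example: a hypothetical 5-over game, two first innings that go the distance, and two chases, one of which reaches its target after 2 overs. The numbers are invented purely to show the mechanism. The `survivors_average` helper averages only over innings still going (the view behind the ball-by-ball curve); `carried_forward_average` is our own illustrative alternative, not the author's method, which freezes a finished innings at its final tally so every innings stays in the denominator.

```python
from statistics import mean

# Toy, made-up progressions: cumulative wickets after each over of a
# hypothetical 5-over game, chosen only to reproduce the paradox.
batting_first = [[0, 1, 2, 3, 5], [1, 1, 2, 4, 5]]
chasing = [[0, 0],            # target reached after 2 overs
           [1, 2, 3, 4, 6]]   # chase goes the distance

def survivors_average(progs, over):
    """Average over innings still going at `over` (the biased view)."""
    return mean(p[over - 1] for p in progs if len(p) >= over)

def carried_forward_average(progs, over):
    """Average over all innings, freezing finished ones at their final tally."""
    return mean(p[min(over, len(p)) - 1] for p in progs)

final_first = mean(p[-1] for p in batting_first)
final_chase = mean(p[-1] for p in chasing)
print(final_first, final_chase)  # chasing loses fewer wickets overall: 5 3
print(survivors_average(batting_first, 3), survivors_average(chasing, 3))  # 2 3
print(carried_forward_average(chasing, 3))  # 1.5
```

At the end of the innings the chasing side has lost fewer wickets on average, yet after over 3 the survivors-only average is higher for the chase, because the quick, wicket-light chase has dropped out of the sample.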

Half of all ODI run chases since 2002 have ended by the 44th over. So even though a team batting second has, on average, lost more wickets at the end of 44 overs than a team batting first, it loses fewer wickets than a team batting first would have lost in 50 overs.
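A statistic like "half of all chases ended by the 44th over" is a median of chase lengths. A minimal sketch, assuming chase lengths are recorded in legal balls (extras such as wides are ignored); the helper names and the sample lengths are hypothetical. `statistics.median_low` is used so the median is always an actual innings length rather than an average of two.

```python
from statistics import median_low

def over_of_ball(ball):
    """1-indexed over containing the given 1-indexed legal ball."""
    return (ball - 1) // 6 + 1

def median_chase_over(chase_lengths_in_balls):
    """Over in which the median chase ended."""
    return over_of_ball(median_low(chase_lengths_in_balls))

# Hypothetical chase lengths in balls (not real data).
print(median_chase_over([120, 259, 300]))
```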

This “feature” (that not all second innings last the distance) is something we need to take care of when doing any data analysis that involves first or second innings, because the way teams bat in the second innings is highly dependent on the target.

When the target is totally out of reach, for example, what the chasing team does doesn’t really matter (except perhaps for net run rate, or NRR, which is used as a tie-breaker). When the target is just about out of reach, you can expect a team to bat with higher risk. Small targets might mean the bowling team has “given up”, leading to anomalous data.

In other words, there is a reason why the Duckworth-Lewis method is calibrated exclusively on data from teams batting first.

Now you might be feeling unsettled that we still don’t have much insight into the question we started off with: are teams taking more or less risk over time, and do they take more risk when batting first or second? I know this might be very small consolation, but I have no idea either! :)